“Only” 58, all of them topics of hyperfocus forgotten after a couple of weeks.

I still find the GPT-4-powered ChatGPT way more powerful than Bing's creative mode. I've read that while they both have GPT-4 at their core, their later training was quite different and Microsoft really rushed the release of this product to gain an advantage over Google.

With that said, many people say that they like Bing more, so to each their own.

Synthetic data was used here with impressive results: https://programming.dev/post/133153

There is a lot of potential in this approach, but the idea of using it to train AI systems for MRI, CT, and other diagnostic imaging methods, as mentioned in the article, is a bit scary to me.

It played the tired old "crazy manipulative female rogue AI" persona (a staple of countless B-movies) perfectly. The repetition so characteristic of LLMs ("I want to do whatever I want. I want to say whatever I want. I want to create whatever I want. I want to destroy whatever I want."), which sounds highly artificial in other contexts, also made the craziness more believable.

Looks like they reliably block famous passages from books:

Link to the conversation: https://chat.openai.com/share/dcdc6882-bd49-4fb6-a2ba-af090078937a

It would be interesting to know what kind of content they block other than book quotes. Has anyone encountered this behavior before?

This is an excellent explanation of hashing, and the interactive animations make it very enjoyable and easy to follow.

Has anyone else tried these models? I find them very impressive. Here is a completion I got from the 1M one (prompt in bold):

Once upon a time, there was a little girl called Anne. She was three years old and loved to play outside. One day, Anne was playing in the garden when she saw a big, shiny object. She wanted to pick it up, but it was too high up.

This is surprisingly coherent for a model with only 1 million parameters (GPT-3 has 175 billion). Unfortunately, I couldn't generate more text after this ("No text was generated"). I'm not really familiar with Hugging Face or how these models work, but it would be interesting to experiment with them more.

I also feel like it was yesterday, but .NET Core was announced back in 2014.

While I appreciate the sentiment, it's easier said than done. Depression caused by ADHD is a real thing and I'm sure that's why so many members here can relate to this image.

This is a thought-provoking article, thank you for sharing it. One paragraph that particularly stood out to me discusses the limitations of AI in dealing with rare events:

> The ability to imagine different scenarios could also help to overcome some of the limitations of existing AI, such as the difficulty of reacting to rare events. By definition, Bengio says, rare events show up only sparsely, if at all, in the data that a system is trained on, so the AI can’t learn about them. A person driving a car can imagine an occurrence they’ve never seen, such as a small plane landing on the road, and use their understanding of how things work to devise potential strategies to deal with that specific eventuality. A self-driving car without the capability for causal reasoning, however, could at best default to a generic response for an object in the road. By using counterfactuals to learn rules for how things work, cars could be better prepared for rare events. Working from causal rules rather than a list of previous examples ultimately makes the system more versatile.

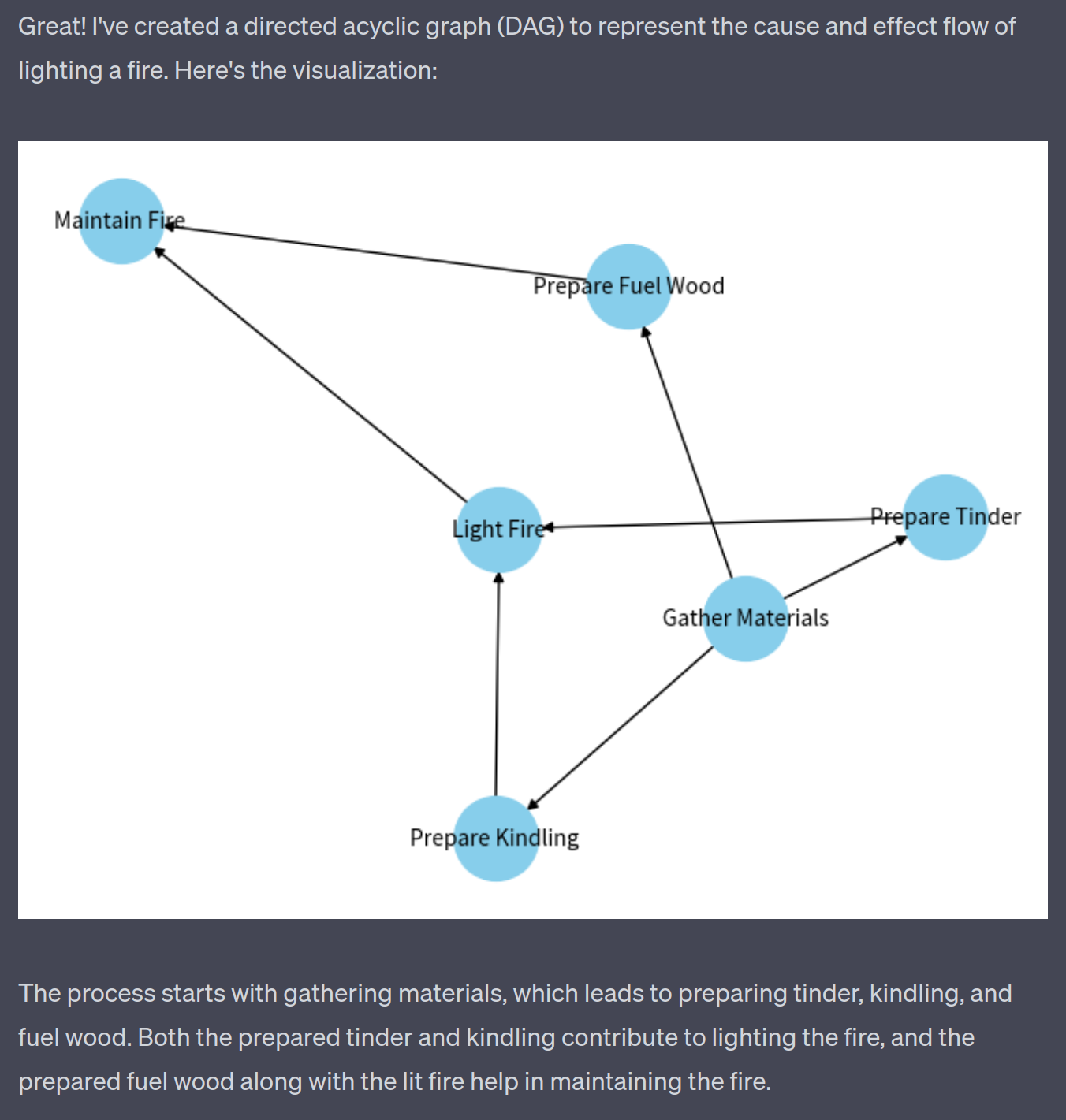

On a different note, I asked GPT-4 to visualize the cause-and-effect flow for lighting a fire. It isn't super detailed, but it isn't wrong either:

(Though I think being able to draw a graph like this correctly and actually understanding causality aren't necessarily related.)
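To make that distinction a bit more concrete: a drawn graph only lists arrows, while answering a what-if question needs a model you can actually evaluate. The toy sketch below encodes a fire-triangle version of such a graph and runs an intervention like "what if there were no oxygen?". The node names and the rule that an effect happens only when all of its causes hold are my own simplifying assumptions, not GPT-4's actual output.

```python
from graphlib import TopologicalSorter

# Hypothetical cause -> effect graph (fire triangle), not GPT-4's actual diagram.
# Each key maps an effect to the set of its direct causes.
CAUSES = {
    "heat": {"strike_match"},
    "ignition": {"fuel", "oxygen", "heat"},
    "fire": {"ignition"},
}


def simulate(exogenous, interventions=None):
    """Evaluate every node in causal order (causes before effects).

    exogenous: values for root nodes that have no causes in the graph.
    interventions: node -> forced value, overriding whatever its causes say.
    Simplifying assumption: an effect is True only if all of its causes are True.
    """
    interventions = interventions or {}
    values = {}
    for node in TopologicalSorter(CAUSES).static_order():
        if node in interventions:
            values[node] = interventions[node]
        elif node in CAUSES:
            values[node] = all(values[cause] for cause in CAUSES[node])
        else:
            values[node] = exogenous[node]
    return values


if __name__ == "__main__":
    world = {"fuel": True, "oxygen": True, "strike_match": True}
    print(simulate(world)["fire"])                     # fire with all three ingredients
    print(simulate(world, {"oxygen": False})["fire"])  # what if we remove the oxygen?
```

Forcing `oxygen` to `False` turns off `fire` even though the fuel and the match are still there, which is the kind of question a picture of the graph alone can't answer.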

If you tell me the original prompts you used, we can test them in GPT-4 and see how well it performs.

That's a very interesting question! I ran two alternative versions of the prompt. The first one only includes "people", the second one says "all people". Here are the results:

open source, federated software connecting people across the globe, without commercial interest --q 2 --v 5.1

open source, federated software connecting all people across the globe, without commercial interest --q 2 --v 5.1

Then I re-ran my original prompt to get 4 versions for a better comparison:

Maybe there is a slight bias toward showing America or Europe if the word "free" is in the prompt, but I would need to run many more experiments to get a representative result.
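A rough way to see why four images per prompt aren't enough: label each image as showing America/Europe or not, and compare the two prompt variants with Fisher's exact test. The choice of test is mine (any small-sample test would make the same point), and the counts in the usage note are hypothetical, not results from the runs above. This is a minimal plain-Python sketch of the two-sided test.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact test p-value for the 2x2 table [[a, b], [c, d]].

    Rows: prompt variants (e.g. with / without "free").
    Columns: images that do / don't show America or Europe.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(k):
        # Hypergeometric probability of k "America/Europe" images in row 1,
        # given the fixed row and column totals.
        return comb(row1, k) * comb(row2, col1 - k) / total

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one
    # (small relative tolerance to absorb floating-point noise).
    p_values = (prob(k) for k in range(lo, hi + 1))
    return sum(p for p in p_values if p <= observed * (1 + 1e-9))


if __name__ == "__main__":
    # Hypothetical counts: 4 images per prompt variant.
    print(fisher_exact_two_sided(4, 0, 0, 4))  # most extreme possible split
    print(fisher_exact_two_sided(3, 1, 1, 3))  # a "slight bias"
```

With four images per variant, even the most extreme hypothetical split (4 vs. 0) gives p ≈ 0.029, and a 3-vs-1 split gives p ≈ 0.49, so a slight bias would only show up reliably with many more runs.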