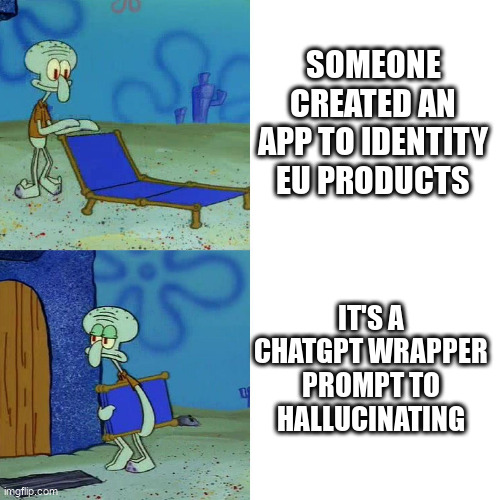

> Boycott US Products

> Uses ChatGPT

Buy European

Overview:

The community to discuss buying European goods and services.

Rules:

- Be kind to each other, and argue in good faith. No direct insults or disrespectful and condescending comments.

- Do not use this community to promote Nationalism/Euronationalism. This community is for discussing European products/services and news related to that. For other topics the following might be of interest:

- Include a disclaimer at the bottom of the post if you're affiliated with the recommendation.

- No Russian suggestions.

Feddit.uk's instance rules apply:

- No racism, sexism, homophobia, transphobia or xenophobia.

- No incitement of violence or promotion of violent ideologies.

- No harassment, dogpiling or doxxing of other users.

- Do not share intentionally false or misleading information.

- Do not spam or abuse network features.

- Alt accounts are permitted, but all accounts must list each other in their bios.

- No generative AI content.

Useful Websites

- General BuyEuropean product database: https://buy-european.net/ (relevant post with background info)

- Switching your tech to European TLDR: https://better-tech.eu/tldr/ (relevant post)

- Buy European meta website with useful links: https://gohug.eu/ (relevant post)

Benefits of Buying Local:

Local investment, job creation, innovation, increased competition, more redundancy.

European Instances

Lemmy:

- Basque Country: https://lemmy.eus/

- 🇧🇪 Belgium: https://0d.gs/

- 🇧🇬 Bulgaria: https://feddit.bg/

- Catalonia: https://lemmy.cat/

- 🇩🇰 Denmark, including Greenland (for now): https://feddit.dk/

- 🇪🇺 Europe: https://europe.pub/

- 🇫🇷🇧🇪🇨🇭 France, Belgium, Switzerland: https://jlai.lu/

- 🇩🇪🇦🇹🇨🇭🇱🇮 Germany, Austria, Switzerland, Liechtenstein: https://feddit.org/

- 🇫🇮 Finland: https://sopuli.xyz/ & https://suppo.fi/

- 🇮🇸 Iceland: https://feddit.is/

- 🇮🇹 Italy: https://feddit.it/

- 🇱🇹 Lithuania: https://group.lt/

- 🇳🇱 Netherlands: https://feddit.nl/

- 🇵🇱 Poland: https://fedit.pl/ & https://szmer.info/

- 🇵🇹 Portugal: https://lemmy.pt/

- 🇸🇮 Slovenia: https://gregtech.eu/

- 🇸🇪 Sweden: https://feddit.nu/

- 🇹🇷 Turkey: https://lemmy.com.tr/

- 🇬🇧 UK: https://feddit.uk/

Friendica:

- 🇦🇹 Austria: https://friendica.io/

- 🇮🇹 Italy: https://poliverso.org/

- 🇩🇪 Germany: https://piratenpartei.social/ & https://anonsys.net/

- 🇫🇷 Significant French-speaking userbase: https://social.trom.tf/

- 🇵🇱 Poland: soc.citizen4.eu

Matrix:

- 🇬🇧 UK: matrix.org & glasgow.social

- 🇫🇷 France: tendomium & imagisphe.re & hadoly.fr

- 🇩🇪 Germany: tchncs.de, catgirl.cloud, pub.solar, yatrix.org, digitalprivacy.diy, oblak.be, nope.chat, envs.net, hot-chilli.im, synod.im & rollenspiel.chat

- 🇳🇱 Netherlands: bark.lgbt

- 🇦🇹 Austria: gemeinsam.jetzt & private.coffee

- 🇫🇮 Finland: pikaviestin.fi & chat.blahaj.zone

Related Communities:

Buy Local:

Continents:

European:

Buying and Selling:

Boycott:

Countries:

Companies:

Stop Publisher Kill Switch in Games Practice:

Banner credits: BYTEAlliance

Prompt to hallucinating?

Do you mean "Prone"?

That is the sort of mistake an LLM would make.

This is precisely the sort of mistake an LLM wouldn't make.

Just got distracted (also English isn't my first language)

Your native tongue is python, you're an LLM, sorry you had to find out this way.

Damn

I hope you're not installing these on your phone...?

Definitely not

The devs are aware. This was a quick-and-dirty prototype and they already knew the issue with using ChatGPT. They did it to make something work ASAP. In an interview (in Danish) the devs acknowledged this and said they are moving toward an LLM developed in France (I forget the name, but that's irrelevant to the point that they will drop ChatGPT).

If that's their solution, then they have absolutely no understanding of the systems they're using.

ChatGPT isn't prone to hallucination because it's ChatGPT; it's prone to it because it's an LLM. That's a fundamental problem common to all LLMs.

Phi-4 is the only one I'm aware of that was deliberately trained to refuse instead of hallucinating. It's mind-blowing to me that this isn't standard. Everyone is trying to maximize benchmarks at all costs.

I wonder if diffusion LLMs will hallucinate less, since they have error correction built into their inference process.

Even that won't be truly effective. It's all marketing at this point.

The problem of hallucination really is fundamental to the technology. If there's a way to prevent it, it won't be as simple as training the model differently.