Regarding linguistics, the use of machine "learning" models feels justified in some cases. Often you have a huge amount of repetitive data that you need to sort out and generalise, and M"L" is great for that.

For example, let's say that you're studying some specific vowel feature. You're probably recording the same word thrice for each informant, and there's, like, 15 words? You'll want people from different educational backgrounds, men and women, and different ages, so let's say 10 informants. From that you're already dealing with 450 recordings (3 × 15 × 10). Each one needs to be thrown into Praat, so you can identify the relevant vowel and measure the average F₁ and F₂ values.

It's an extremely simple task, easy to generalise, but damn laborious to perform by hand. That's where machine learning should kick in: you should be able to teach it "this is a vowel, look at those smooth formants, we want this" vs. "this is a fricative, it has static-like noise, disregard it", then feed it the 450 audio files, and have it output F₁ and F₂ values for you in a clean .csv.
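Here's a rough sketch of the boring half of that pipeline, just to show how little is left once the vowel has been found. It assumes that something else (the model, or Praat) already handed you per-frame F₁/F₂ measurements for the vowel in each recording; the file names, the numbers and the function names `summarise` and `to_csv` are all made up for illustration.

```python
import csv
import io
from statistics import mean

# 3 repetitions x 15 words x 10 informants, as in the example above
n_recordings = 3 * 15 * 10

def summarise(recordings):
    """Average F1 and F2 per recording.

    `recordings` maps a file name to a list of (F1, F2) frame
    measurements in Hz, taken inside the vowel only.
    """
    rows = []
    for name, frames in sorted(recordings.items()):
        f1 = mean(frame[0] for frame in frames)
        f2 = mean(frame[1] for frame in frames)
        rows.append({"file": name, "F1_Hz": round(f1), "F2_Hz": round(f2)})
    return rows

def to_csv(rows):
    """Render the summary rows as CSV text."""
    out = io.StringIO()
    writer = csv.DictWriter(
        out, fieldnames=["file", "F1_Hz", "F2_Hz"], lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

# Hypothetical measurements, NOT real data.
demo = {
    "spk01_word01_rep1.wav": [(700, 1200), (720, 1240), (710, 1220)],
    "spk01_word01_rep2.wav": [(690, 1180), (710, 1200)],
}

rows = summarise(demo)
csv_text = to_csv(rows)
print(csv_text)
```

The hard part, telling the vowel apart from the fricative next to it, is exactly the bit that isn't in this sketch; that's the job you'd want the model for.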

And if you're using it to draw the conclusions for you, you probably suck as a researcher.

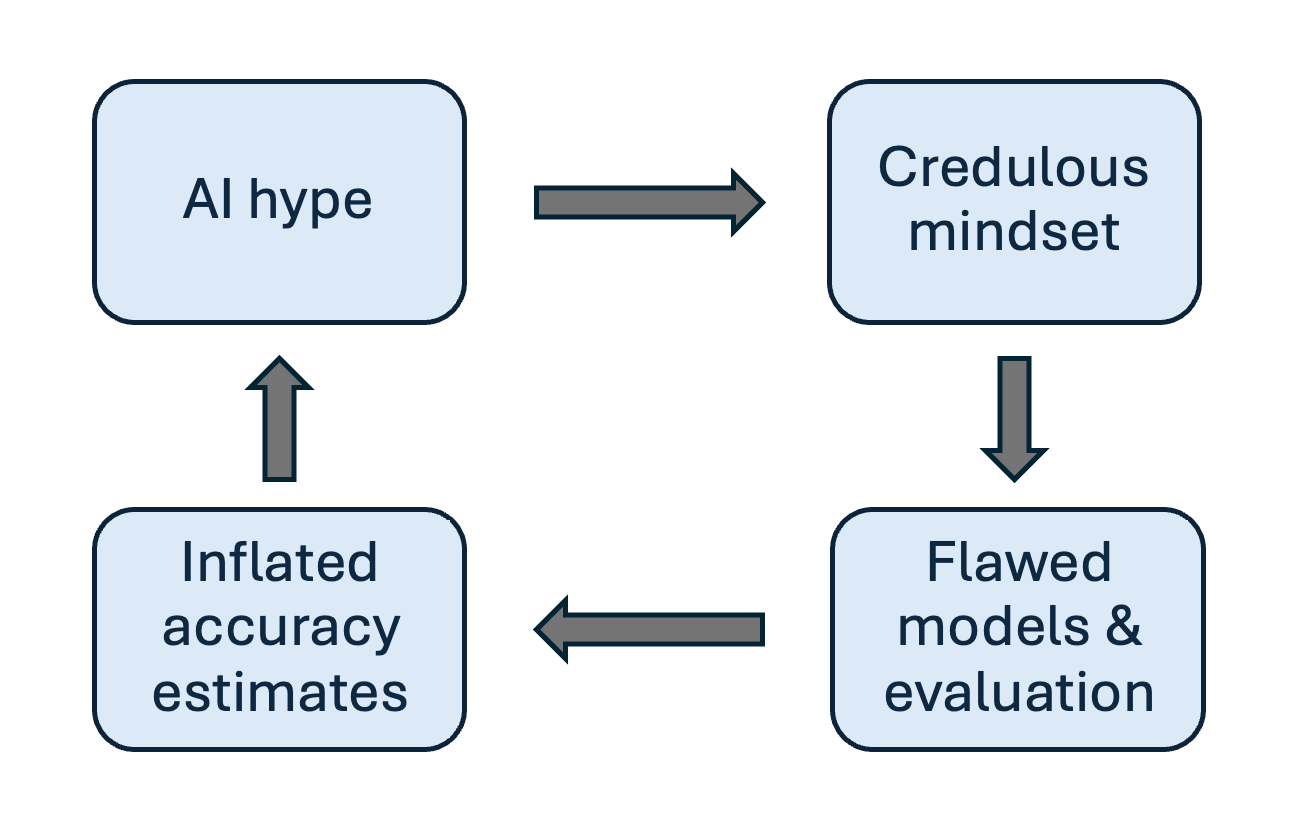

This picture from the link is amazing:

And it's exemplified by the very discussion in "Reddit LARPs as h4x0rz @ ycombinator dot com". Especially the top right; the sheer number of users there stinking of credulousness is funny.

From here on I'll copypaste a few HN comments. I'll use the same letter for the same user, and numbers to tell their comments apart when necessary.

[A1] I feel like 90% of AI discussions online these days can be shut down with “a probabilistic syllable generator is not intelligence”

I feel so too. But every time that you state the obvious, you'll get a crowd of functionally illiterate and irrational people disputing it, and that crowd effectively works like a big moustached "MRRROOOOOOO"-ing sealion.

[B, answering A1] How do you define intelligence?

That user is playing musical chairs with definitions. If you do provide a definition, users like this are prone to waste your time chain-gunning "ackshyually" statements, eventually escalating into appeal to ignorance + ad nauseam.

[C1, answering A1] Humans are not fact machines, we are often wrong. Do humans not have intelligence?

[A2, answering C1] Like clockwork, out come the "but humans" deflections. An LLM is not a human-like intelligence. This is patently obvious, such comparisons are nonsensical and just further the problem of people anthropomorphizing a tool and treating it like an oracle.

A is calling C1 a deflection. I'd go further: it's whataboutism plus extended analogy. They're simply taking the "they're like humans" analogy as if it was more than a simple analogy.

[C2, answering A2] You didn't answer the question [in C1].

"I demand that you bite my whataboutism!".

A2 actually answers C1, by correctly pointing out that the question is nonsensical.

[A3, answering C2] I did, I said they aren't human-like intelligences, so countering with "humans make mistakes, are humans not intelligent?" is drawing a false equivalence between humans and LLMs. // Since we do not possess a definition of intelligence that isn't human-like, it would be meaningless to argue if LLMs are intelligent in general. All that can be said is that they are not intelligent in the way that humans are.

At this point A takes the bait: "All that can be said is that they are not intelligent in the way that humans are." opens room for "ackshyually" and similar idiocies.

[D, another comment chain] But surely if it's artificial intelligence then it'd know its limits and would respond appropriately? Oracle use no problem? // It is it because it's actually shit but it's the best thing we've seen yet and everyone is just in denial?

It is not shit if you know what to use it for. ...but then even shit can become fertiliser.

Seriously now, the faster you ditch the faith that machine "learning" is intelligent, the faster you find things that it does in a non-shitty way. I listed one example myself.

[E, replying to D] People constantly misevaluate their own limits though. Why should AI not be allowed to do that?

Whataboutism, again; and the same one, that boils down to "I dun unrrurstand, but wharabout ppl doin mistakes? I is confusion!". Are you noticing the pattern?

Answering the question: AI should not "be allowed" to do that for the same reason why a screwdriver should not "be allowed" to let screws slip. Because it's a tool malfunctioning in a way that interferes with what people use it for.

[F, another comment chain] Is "leakage" just another term for overfitting?

Not quite. I'll use a human-like analogy, but bear in mind that this is solely a didactic example, and that analogies break if pushed too far.

- Overfitting - what conspiracy "theorists" do, associating random things that have nothing to do with each other as if they were related.

- Leakage - when a student excels in an exam not because they understood the topic well, but because they trained themselves to solve that exam.

In other words:

- Overfitting happens when the model latches onto noise and quirks of its training data as if they were real patterns; it then does great on that data and badly on anything new

- Leakage happens when information that the model shouldn't have access to (such as the test data, or the answer itself) ends up in training; it then scores well without developing the associations that it's supposed to develop
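If you want to see the difference in numbers, here's a toy sketch, with the same caveat as the analogy: it's didactic, not a serious benchmark. The data is pure noise (random features, random labels), so there's nothing real to learn, and the "model" is a 1-nearest-neighbour memoriser; the names `noise`, `nearest_label` and `accuracy` are mine.

```python
import random

rng = random.Random(0)  # fixed seed so the run is repeatable

def noise(n):
    """n points of pure noise: a random feature with a random 0/1 label."""
    return [(rng.random(), rng.choice([0, 1])) for _ in range(n)]

def nearest_label(train, x):
    """1-nearest-neighbour: copy the label of the closest training point."""
    return min(train, key=lambda item: abs(item[0] - x))[1]

def accuracy(train, data):
    """Fraction of `data` labelled correctly by a model built from `train`."""
    hits = sum(nearest_label(train, x) == y for x, y in data)
    return hits / len(data)

train = noise(200)
exam = noise(200)

# Overfitting: the model memorised the noise, so it looks perfect on its
# own training data but is no better than guessing on anything new.
train_acc = accuracy(train, train)
honest_acc = accuracy(train, exam)

# Leakage: the exam ended up inside the training data, so the exam score
# looks perfect even though nothing was actually learned.
leaked_acc = accuracy(train + exam, exam)

print(f"training data: {train_acc:.2f}")
print(f"honest exam:   {honest_acc:.2f}")
print(f"leaked exam:   {leaked_acc:.2f}")
```

The training score and the leaked exam score both come out perfect, while the honest exam score hovers around coin-flip level. Overfitting is the gap between the first and the second number; leakage is what makes the third number lie to you.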