
I really don't understand what you mean by this. It sounds like a very inefficient way to use ControlNet. What settings would you be tweaking with an LLM? Why would you use an LLM for that, instead of a slider/checkbox?

I wouldn't do it lol. But you could create a workflow in which you could make similar products using only prompts and some custom tooling.

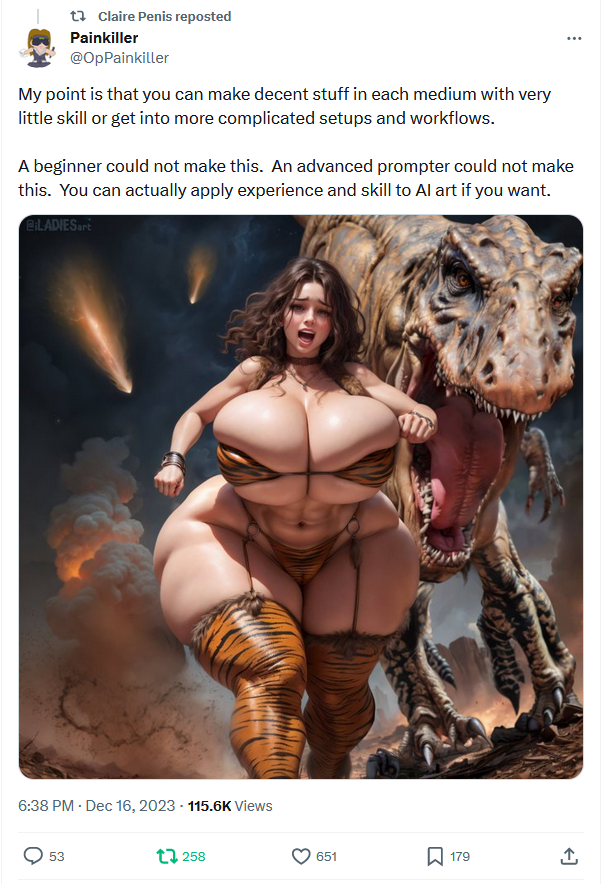

The creator of this piece said that it can't be made by a prompt. What they are insinuating is that some kinds of input make the output inherently superior to others. But that's an arbitrary and ignorant argument when you look at it from the perspective of LLM product design.

It used to be that parameters were entered at the command line. Obviously that becomes impractical for mature use cases, so UI frontends were created to give us sliders and checkboxes, which match the kind of environments their users already work in. But those are typically power users (by interest, not necessarily competency), and we've since seen a new iteration of UI design in consumer image-generation products like DALL-E and Midjourney. Just because a model has a user-friendly skin doesn't mean its functionality is any less capable: you can pass parameters in prompts, for example.
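To make "parameters in prompts" concrete, here's a rough sketch of how a frontend might pull inline flags out of a prompt. The `--key value` syntax is modeled loosely on the style Midjourney uses; the function name and the regex are my own assumptions, not anything taken from a real product.

```python
import re

# Hypothetical flag syntax: "--key value" anywhere in the prompt.
FLAG_RE = re.compile(r"--(\w+)\s+(\S+)")

def split_prompt(prompt: str) -> tuple[str, dict[str, str]]:
    """Separate the descriptive text from inline '--key value' parameters."""
    # Collect every flag as a key/value pair (values stay as strings).
    params = {key: value for key, value in FLAG_RE.findall(prompt)}
    # Strip the flags out, then collapse any leftover whitespace.
    text = " ".join(FLAG_RE.sub("", prompt).split())
    return text, params

text, params = split_prompt("a lighthouse at dusk --ar 16:9 --seed 42")
print(text)    # a lighthouse at dusk
print(params)  # {'ar': '16:9', 'seed': '42'}
```

Same information a slider would carry, just typed instead of dragged.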

But if you had a use case for a product that drives ControlNet with only prompts, like I said, it would benefit from extra tooling: presets, libraries, defaults, etc. With these in place, more powerful functionality becomes quickly accessible through the prompt format. I'm not Nostradamus, but one area where simpler inputs are much more desirable than power-user dashboards is products intended to be used by drivers (not all of which relate to the act of driving itself, like voice-to-text messaging).
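As a sketch of what that tooling could look like: a small preset library layered over defaults, triggered by a tag in the prompt. Everything here is hypothetical. The `@preset` tag syntax, the setting keys, and the values are made up for illustration and don't correspond to any real ControlNet API; only the preprocessor names (canny, scribble, openpose) are borrowed from common ControlNet usage.

```python
# Hypothetical defaults and presets; the keys are illustrative, not a real API.
DEFAULTS = {"preprocessor": "canny", "weight": 1.0, "guidance_end": 1.0}

PRESETS = {
    "sketch": {"preprocessor": "scribble", "weight": 0.8},
    "pose": {"preprocessor": "openpose"},
}

def resolve_settings(prompt: str) -> tuple[str, dict]:
    """Expand '@preset' tags in a prompt into a full settings dict."""
    settings = dict(DEFAULTS)  # start from defaults so every key is present
    kept = []
    for word in prompt.split():
        if word.startswith("@") and word[1:] in PRESETS:
            # A known preset overrides only the keys it names.
            settings.update(PRESETS[word[1:]])
        else:
            kept.append(word)
    return " ".join(kept), settings

text, settings = resolve_settings("a dancer mid-leap @pose")
print(text)      # a dancer mid-leap
print(settings)  # {'preprocessor': 'openpose', 'weight': 1.0, 'guidance_end': 1.0}
```

One word in the prompt stands in for a whole panel of sliders, which is the kind of shortcut a hands-busy user would need.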

I guess my point is that arguing "my sliders are better than your prompt" is like saying the back door gets you into the house better than the front door. It's really nothing more than a schoolyard pissing contest that shows a limited perspective on the matter.