this post was submitted on 08 Oct 2023

219 points (93.3% liked)


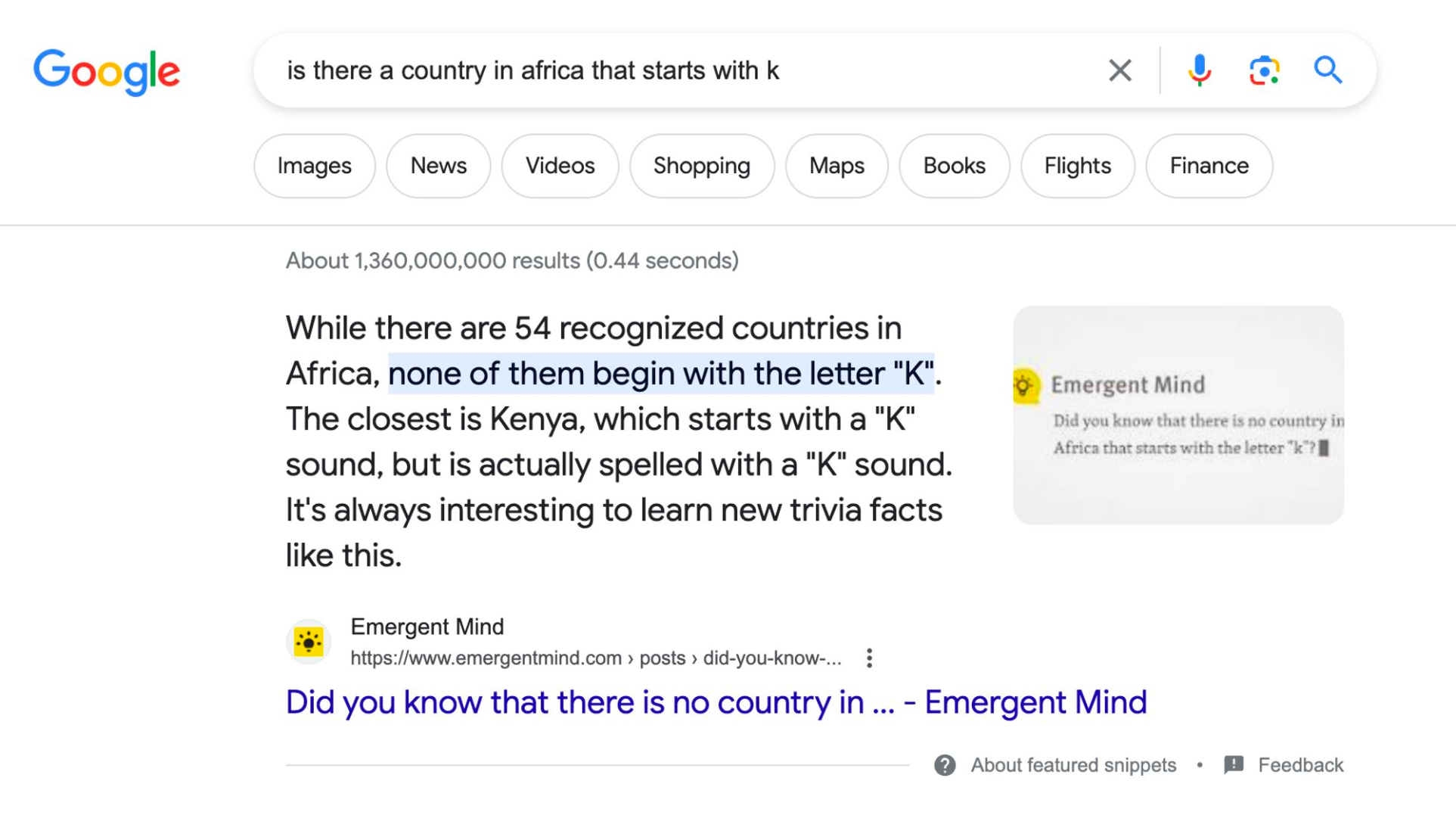

Everyone who's actually worked a real job knows it's better for someone to not do a job at all than to do it 75% right.

Because now that you know the LLM is getting basic information wrong, you can't trust that anything it produces is correct. You need to spend extra time fact-checking it.

LLMs like Bard and ChatGPT/GPT-3/3.5/4 are great at parsing questions and producing results that sound good, but they are awful at giving correct answers.