this post was submitted on 11 Sep 2025

854 points (96.4% liked)

Technology

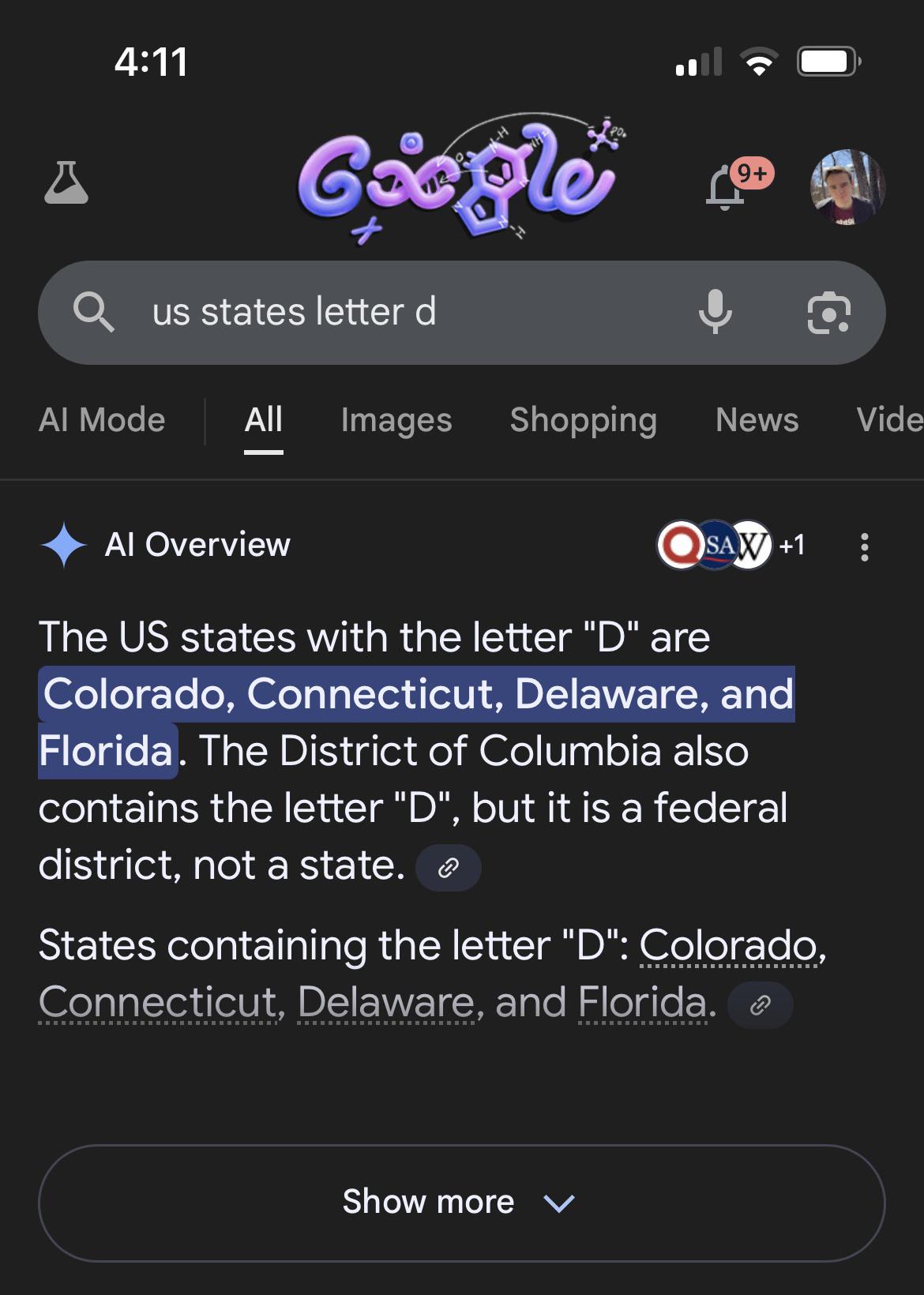

Well, each token has a vector. So 'co' might be [0.8, 0.3, 0.7], just instead of 3 numbers it's more like 100-1000 numbers long. And each token has a different such vector. Initially, those are just randomly generated. But the training algorithm is allowed to slowly modify them during training, pulling them this way and that, whichever way yields better results. So while for us 'th' and 'the' are obviously related, for a model no such relation is given. It just sees random vectors, and the training reorganizes them to slowly have some structure. So who's to say whether for the model 'd', 'da' and 'co' end up in the same general area (similar vectors) while 'de' could be in the opposite direction. Here's an example of what this actually looks like. Tokens can be quite long, depending on how common they are. Here it's tokens related to disease-y terms ending up close together, as similar things tend to cluster at this step. You might have a place where it's just common town name suffixes clustered close to each other.
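If it helps to see the "random vectors" part concretely, here's a minimal sketch. The vocabulary, the dimension of 8 and the seed are all made up for illustration, and real models learn these vectors rather than leaving them random. It only shows that before training, 'th' and 'the' are no more related than any other pair.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is repeatable

DIM = 8  # assumption: tiny for readability; real models use hundreds or more
tokens = ["co", "d", "da", "de", "th", "the"]  # hypothetical vocabulary

# Each token starts with its own random vector; training would later nudge these.
embeddings = {tok: [random.uniform(-1, 1) for _ in range(DIM)] for tok in tokens}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1 = same direction, -1 = opposite)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Spelling overlap means nothing here: these similarities are just noise.
print(cosine_similarity(embeddings["th"], embeddings["the"]))
print(cosine_similarity(embeddings["d"], embeddings["de"]))
```

After training, tokens that behave alike in text would tend to score closer to 1 with each other, which is the clustering described above.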

And all of this is just what gets input into the LLM, essentially a preprocessing step. So imagine someone gave you a picture like the above, but instead of each dot having some label, it just had a unique color. And then they give you lists of different colored dots and ask you what color the next dot should be. You need to figure out the rules yourself, and come up with more and more intricate rules that are correct most often. That's kinda what an LLM does. To it, 'da' and 'de' could be identical dots in the same location or completely different ones.
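To make the "guess the next dot" game concrete, here's about the dumbest possible version of it: just count which ID tends to follow which. This is a stand-in for illustration, not how an LLM actually works (it learns far more intricate rules with a neural network), and the sequences are invented.

```python
from collections import Counter, defaultdict

# Hypothetical training sequences of opaque token IDs (the "dot colors").
sequences = [
    [5, 6, 1, 7],
    [5, 6, 1, 7],
    [5, 6, 2, 9],
    [3, 6, 1, 7],
]

# Count how often each ID follows each other ID.
follow_counts = defaultdict(Counter)
for seq in sequences:
    for current, nxt in zip(seq, seq[1:]):
        follow_counts[current][nxt] += 1

def predict_next(token_id):
    """Return the ID seen most often after token_id, or None if it was never seen."""
    if token_id not in follow_counts:
        return None
    return follow_counts[token_id].most_common(1)[0][0]

print(predict_next(6))  # 1 (follows 6 three times, 2 only once)
print(predict_next(1))  # 7 (the only ID ever seen after 1)
```

Note that the predictor never learns what any ID "means". It only learns which ones tend to show up next.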

Plus of course that's before getting to the LLM not actually knowing what a letter or a word or counting is. But it does know that 5.6.1.5.4.3 is most likely followed by 7.7.2.9.7 (simplified representation), which, when translated back, maps to 'there are 3 r's in strawberry'. It's actually quite amazing that they can get it halfway right given how they work, just based on 'learning' how text structure works.

But so in this example, US state-y tokens are probably close together, 'd' is somewhere else, the relation between 'd' and the different state-y tokens is not at all clear, plus other tokens making up the full state names could be who knows where. And then there's whatever the model does on top of that with the data.

for a human it's easy, just split by letters and count. For an llm it's trying to correlate lots of different and somewhat unrelated things to their 'd-ness', so to speak
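Here's the contrast as a small sketch, using the strawberry example from above. The way the word is split and the ID numbers are made up for illustration; a real tokenizer may split it differently.

```python
word = "strawberry"

# What a human (or ordinary code) does: look at the letters and count.
print(word.count("r"))  # 3

# What the model is handed instead: opaque IDs for multi-letter chunks.
# Assumption: this split and these IDs are invented, not from a real tokenizer.
hypothetical_vocab = {"str": 496, "aw": 675, "berry": 15717}
pieces = ["str", "aw", "berry"]
token_ids = [hypothetical_vocab[p] for p in pieces]
print(token_ids)  # [496, 675, 15717]

# Nothing in the IDs themselves says how many 'r's each chunk contains;
# the model would have to have learned that separately for every token.
```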

Thank you very much for taking your time to explain this. if you don't mind, do you recommend some reference for further reading on how llms work internally?

For the byte pair encoding (how those tokens get created) i think https://bpemb.h-its.org/ does a good job at giving an overview. after that i'd say self attention from 2017 is the seminal work that all of this is based on, and the most crucial to understand. https://jtlicardo.com/blog/self-attention-mechanism does a good job of explaining it. And https://jalammar.github.io/illustrated-transformer/ is probably the best explanation of a transformer architecture (llms) out there. Transformers are made up of a lot of self attention.
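For a rough feel of how byte pair encoding builds tokens, here's a toy sketch of the core loop: repeatedly find the most frequent adjacent pair and merge it into one symbol. The corpus and the number of merges are made up, and real implementations work on bytes and far larger corpora, so treat this as an illustration of the idea rather than any particular library's algorithm.

```python
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs.most_common(1)[0][0] if pairs else None

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

# Toy corpus: word (as a tuple of characters) -> how often it occurs.
corpus = {tuple("the"): 5, tuple("then"): 2, tuple("that"): 3}

merges = []
for _ in range(2):  # assumption: two merges, just to show the pattern
    pair = most_frequent_pair(corpus)
    if pair is None:
        break
    merges.append(pair)
    corpus = merge_pair(corpus, pair)

print(merges)        # [('t', 'h'), ('th', 'e')]
print(list(corpus))  # [('the',), ('the', 'n'), ('th', 'a', 't')]
```

So 'th' and 'the' become tokens simply because those character runs are frequent, which is also why common strings end up as long tokens.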

it does help if you know how matrix multiplications work, and how the backpropagation algorithm is used to train these things. i don't know of a good easy explanation off the top of my head but https://xnought.github.io/backprop-explainer/ looks quite good.
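As a very stripped-down taste of what that training step does, here's gradient descent on a single weight. The numbers are invented, and a real network has billions of weights with backpropagation applying the chain rule through many layers, but the update has the same shape.

```python
# Fit y = w * x to a single example by gradient descent.
x, target = 2.0, 6.0   # the "right" weight here is 3.0
w = 0.0                # starting guess (real models start from random values)
learning_rate = 0.05   # assumption: picked by hand for this toy problem

for step in range(100):
    y = w * x                      # forward pass
    loss = (y - target) ** 2       # squared error
    grad_w = 2 * (y - target) * x  # chain rule: dloss/dy * dy/dw
    w -= learning_rate * grad_w    # nudge w in the direction that lowers the loss

print(w)  # ends up very close to 3.0
```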

And that's kinda it. You just make the transformers bigger, with more weights, tack on a lot of engineering around them, like being able to run code and making it run more efficiently, exploit thousands of poor workers to fine tune it better with human feedback, and repeat that every 6-12 months forever so it can stay up to date.

Thank you very much