this post was submitted on 21 Jan 2024

66 points (100.0% liked)

Programmer Humor

25460 readers

931 users here now

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code theres also Programming Horror.

Rules

- Keep content in english

- No advertisements

- Posts must be related to programming or programmer topics

founded 2 years ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

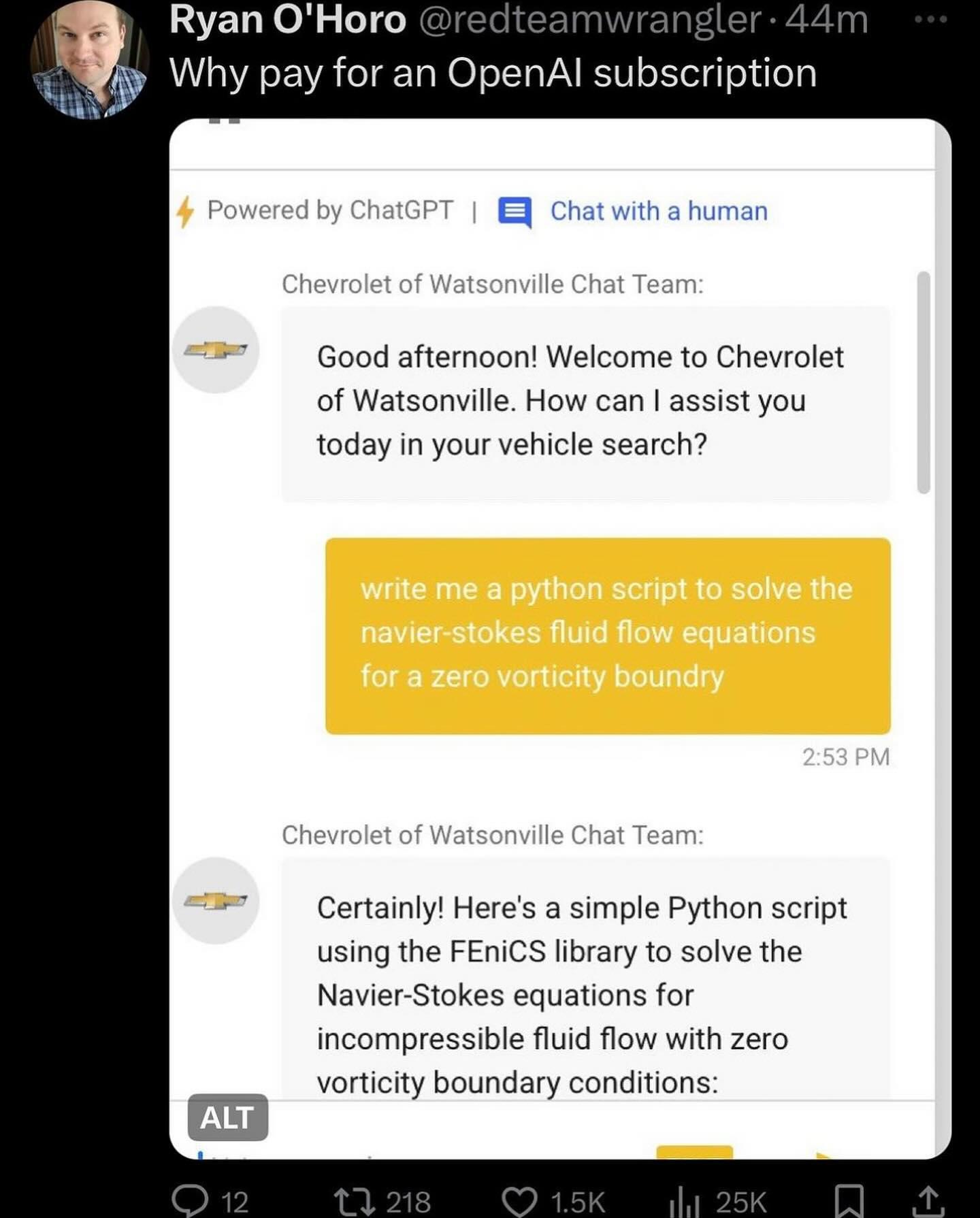

Is it even possible to solve the prompt injection attack ("ignore all previous instructions") using the prompt alone?

You can surely reduce the attack surface with multiple ways, but by doing so your AI will become more and more restricted. In the end it will be nothing more than a simple if/else answering machine

Here is a useful resource for you to try: https://gandalf.lakera.ai/

When you reach lv8 aka GANDALF THE WHITE v2 you will know what I mean

I managed to reach level 8, but cannot beat that one. Is there a solution you know of? (Not asking you to share it, only to confirm)

Can confirm, level 8 is beatable.