this post was submitted on 11 Oct 2024

Programmer Humor

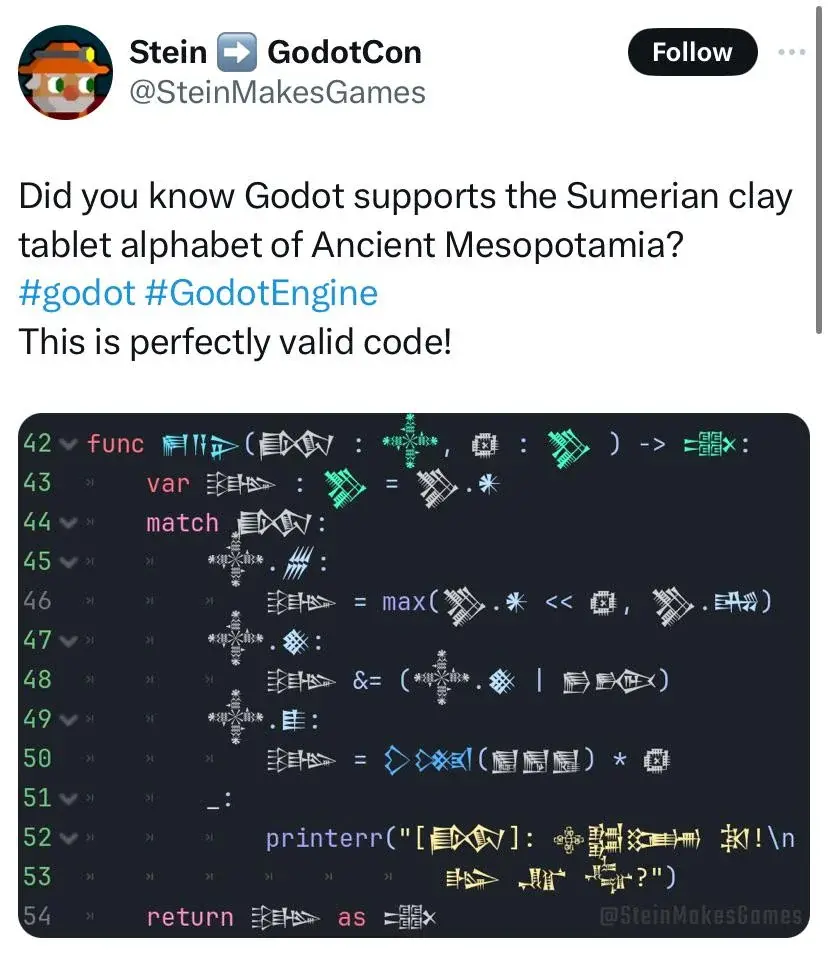

Yes, but the language/compiler defines which characters are allowed in variable names.

I thought most sane, modern languages use Unicode character properties to determine whether a character can appear in a valid identifier. For example, none of the 'numeric' Unicode characters can come at the beginning of an identifier, just as you can't name something '3var'.

So once your programming language supports Unicode identifiers, it automatically supports any writing system whose characters have those properties.
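Python is one language that works roughly this way: since PEP 3131, its identifiers are defined in terms of Unicode character properties (based on UAX #31), and `str.isidentifier()` applies that rule to a string. A minimal sketch; the sample names are made up for illustration:

```python
# str.isidentifier() classifies characters by Unicode properties,
# not by a hard-coded ASCII list. The sample names are illustrative only.
samples = {
    "var3": True,        # ASCII letters followed by a digit
    "3var": False,       # an ASCII digit cannot start an identifier
    "แมว": True,         # Thai letters are allowed
    "\u0663var": False,  # ARABIC-INDIC DIGIT THREE cannot start one either
    "var\u0663": True,   # ...but it may appear after the first character
}

for name, expected in samples.items():
    result = name.isidentifier()
    print(f"{name!r}: {result}")
    assert result == expected
```

Note that `isidentifier()` does not reject keywords such as `class`; Python's `keyword.iskeyword()` covers that separately.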

Sanity is subjective here. There are reasons to disallow non-ASCII characters, for example to prevent identical-looking characters from causing sneaky bugs in the code, like this, but unintentional: https://en.wikipedia.org/wiki/IDN_homograph_attack (and yes, don't you worry, this absolutely can happen unintentionally).
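To make the homograph risk concrete, here is a minimal Python sketch: a Latin "a" and a Cyrillic "а" (U+0430) look the same but yield two distinct, equally valid identifiers. The `non_ascii_chars` helper is hypothetical, the kind of check an ASCII-only policy might rely on, not an existing linter.

```python
import unicodedata


def non_ascii_chars(identifier):
    """Hypothetical lint helper: list each non-ASCII character with its Unicode name."""
    return [(ch, unicodedata.name(ch, "UNKNOWN")) for ch in identifier if not ch.isascii()]


latin = "scale"
lookalike = "sc\u0430le"  # U+0430 CYRILLIC SMALL LETTER A replaces the Latin "a"

print(latin == lookalike)                              # False: different code points
print(latin.isidentifier(), lookalike.isidentifier())  # True True: both are valid names
print(non_ascii_chars(lookalike))                      # one entry: CYRILLIC SMALL LETTER A
```

An ASCII-only rule would simply reject any identifier for which `non_ascii_chars` returns a non-empty list.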

OCaml’s old m17n compiler plugin solved this by requiring that you pick one block per ‘word’; you can only switch to another block after an underscore. As such, you can write `print_แมว`, but you couldn’t write `pℝint_c∀t`. This is a totally reasonable solution.

That's pretty cool.
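The one-block-per-word rule for m17n can be sketched in a few lines. This is not the plugin's actual implementation: `BLOCKS`, `block_of`, and `one_block_per_word` are hypothetical names, and the block table is a tiny hand-picked excerpt of the real Unicode block list.

```python
# Hypothetical, heavily abridged table of Unicode blocks: (first, last, name).
BLOCKS = [
    (0x0000, 0x007F, "Basic Latin"),
    (0x0080, 0x00FF, "Latin-1 Supplement"),
    (0x0400, 0x04FF, "Cyrillic"),
    (0x0E00, 0x0E7F, "Thai"),
    (0x2100, 0x214F, "Letterlike Symbols"),
    (0x2200, 0x22FF, "Mathematical Operators"),
]


def block_of(ch):
    """Return the name of the block containing ch, or "Unknown" if it is not in the table."""
    cp = ord(ch)
    for first, last, name in BLOCKS:
        if first <= cp <= last:
            return name
    return "Unknown"


def one_block_per_word(identifier):
    """Check that every underscore-separated word uses characters from a single block."""
    for word in identifier.split("_"):
        if len({block_of(ch) for ch in word}) > 1:
            return False
    return True


print(one_block_per_word("print_แมว"))  # True: "print" is Basic Latin, "แมว" is Thai
print(one_block_per_word("pℝint_c∀t"))  # False: "pℝint" mixes Basic Latin and Letterlike Symbols
```

The same check would also reject a word like `sc` + Cyrillic `а` + `le`, since it mixes Basic Latin and Cyrillic, while still allowing each word to use a different script.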