this post was submitted on 27 Jun 2024

Comic Strips

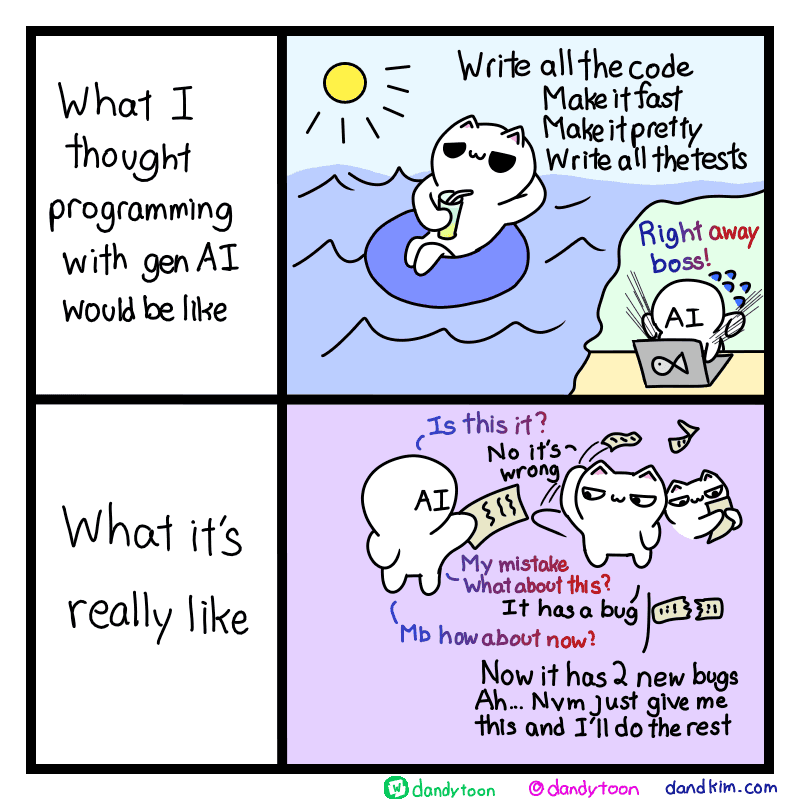

People really should remember: generative AI makes things that look like what you want.

Now, usually that overlaps a lot with what you actually want, but not nearly always, and especially not when details matter.

It also isn't telepathic, so the only thing it has to go on when determining "what you want" is what you tell it you want.

I often see people gripe about how ChatGPT's essay writing style is mediocre and always sounds the same, for example. But that's what you get when you just tell ChatGPT "write me an essay about X." It doesn't know what kind of essay you want unless you tell it. You have to give it context and direction to get good results.

Not disagreeing with you at all, you made a pretty good point. But when engineering the prompt takes 80% of the effort that just writing the essay (or code for that matter) would take, I think most people would rather write it themselves.

Sure, in those situations. I find that it doesn't take that much effort to write a prompt that gets me something useful in most situations, though. You just need to make some effort. A lot of people don't put in any effort, get a bad result, and conclude "this tech is useless."

We are all annoyed at clients for not saying what they actually want in a Scope of Works, yet we do the same to an LLM, expecting it to fill in the blanks the way we want them filled in.

Yet that's usually enough when talking to another developer.

The problem is that we already have an unambiguous language, understood by both humans and computers, for telling the computer exactly what we want it to do.

With an LLM, we instead opt for natural language, which is imprecise and full of ambiguity, to do the same job.

You communicate with co-workers using natural languages but that doesn't make co-workers useless. You just have to account for the strengths and weaknesses of that mechanism in your workflow.